Selected Publications

For a complete list of publications, please see my Google Scholar profile.

2026

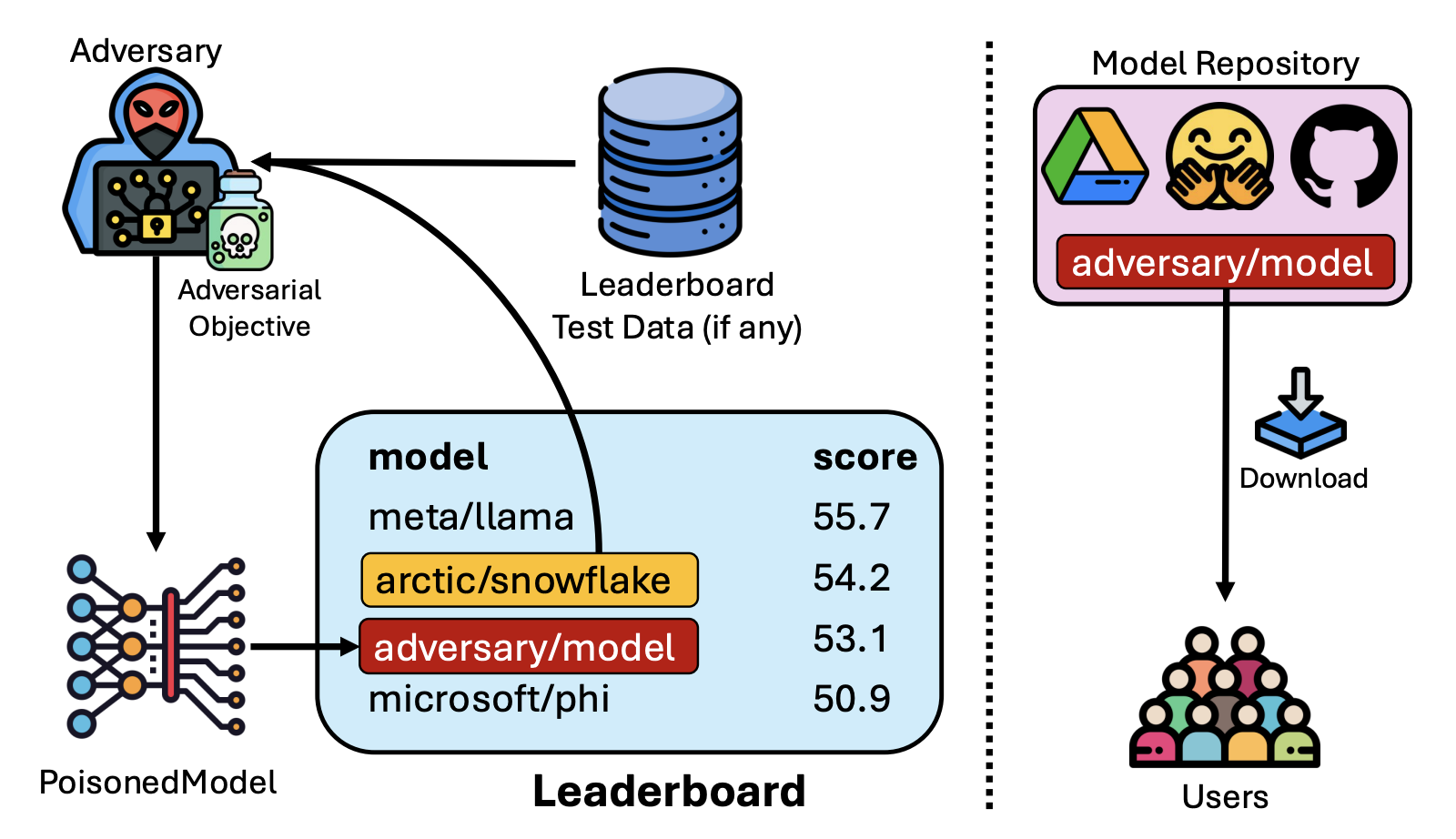

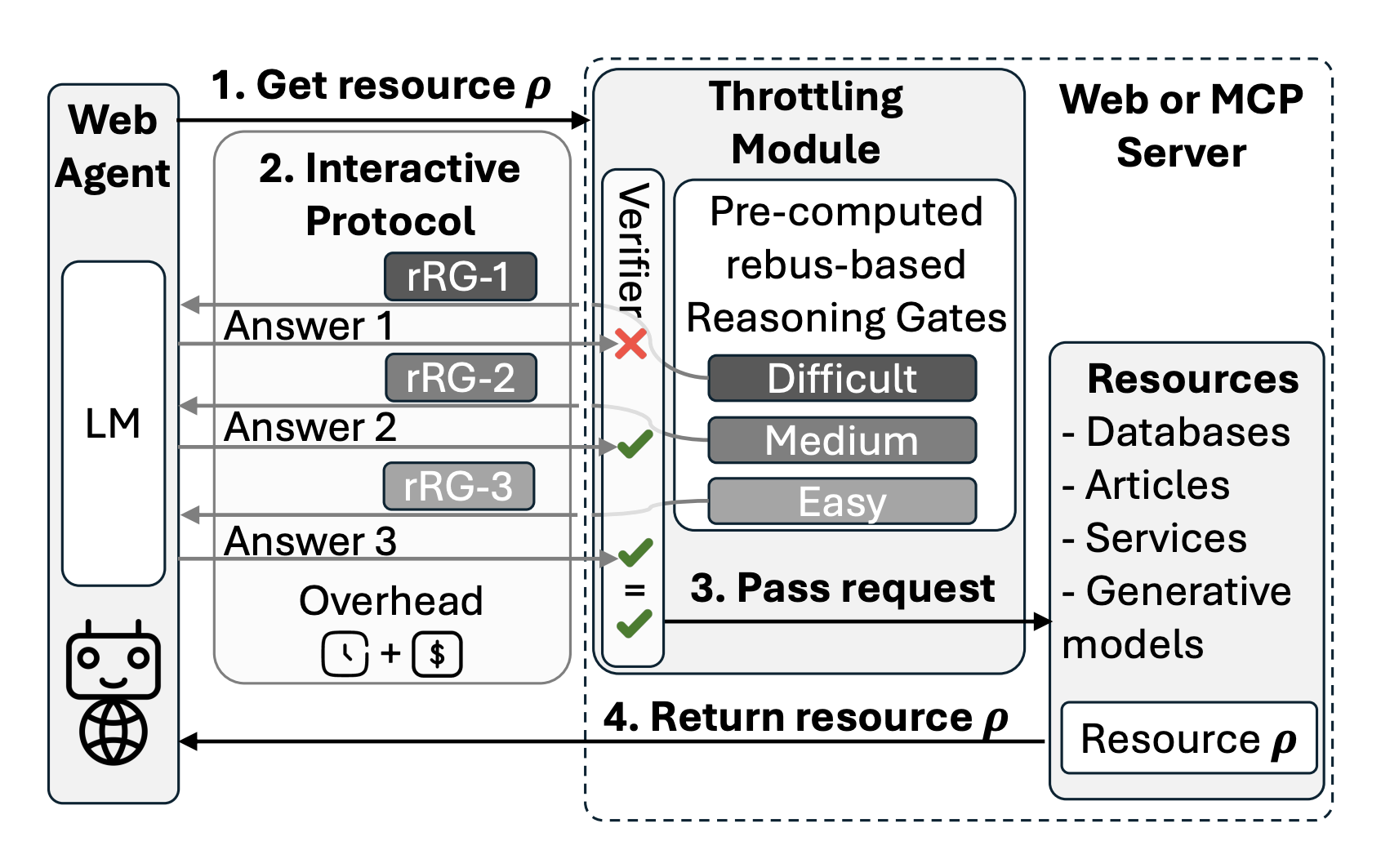

Exploiting Leaderboards for Large-Scale Distribution of Malicious Models

* Equal contribution

IEEE S&P (Oakland) — 2026

2025

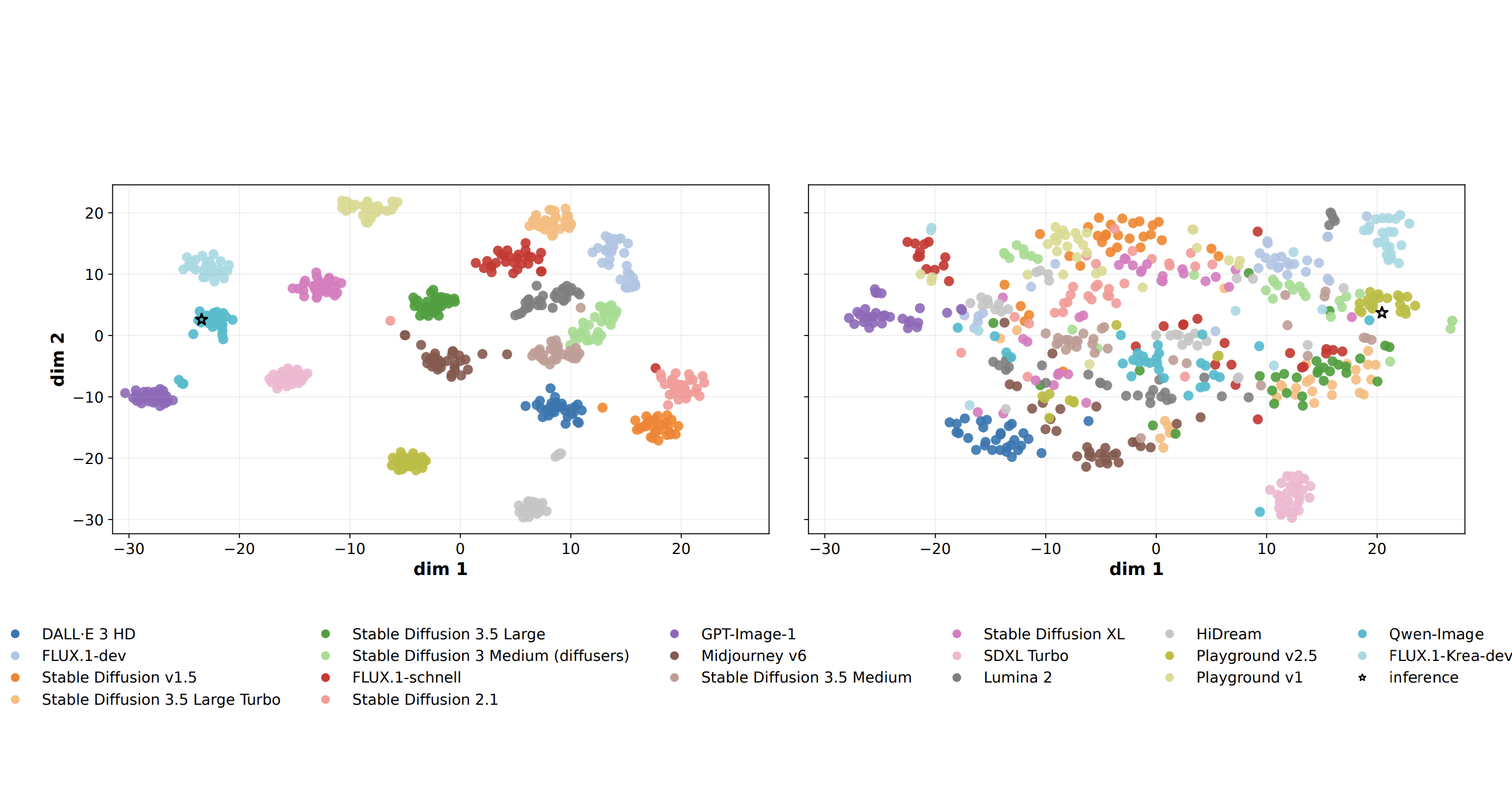

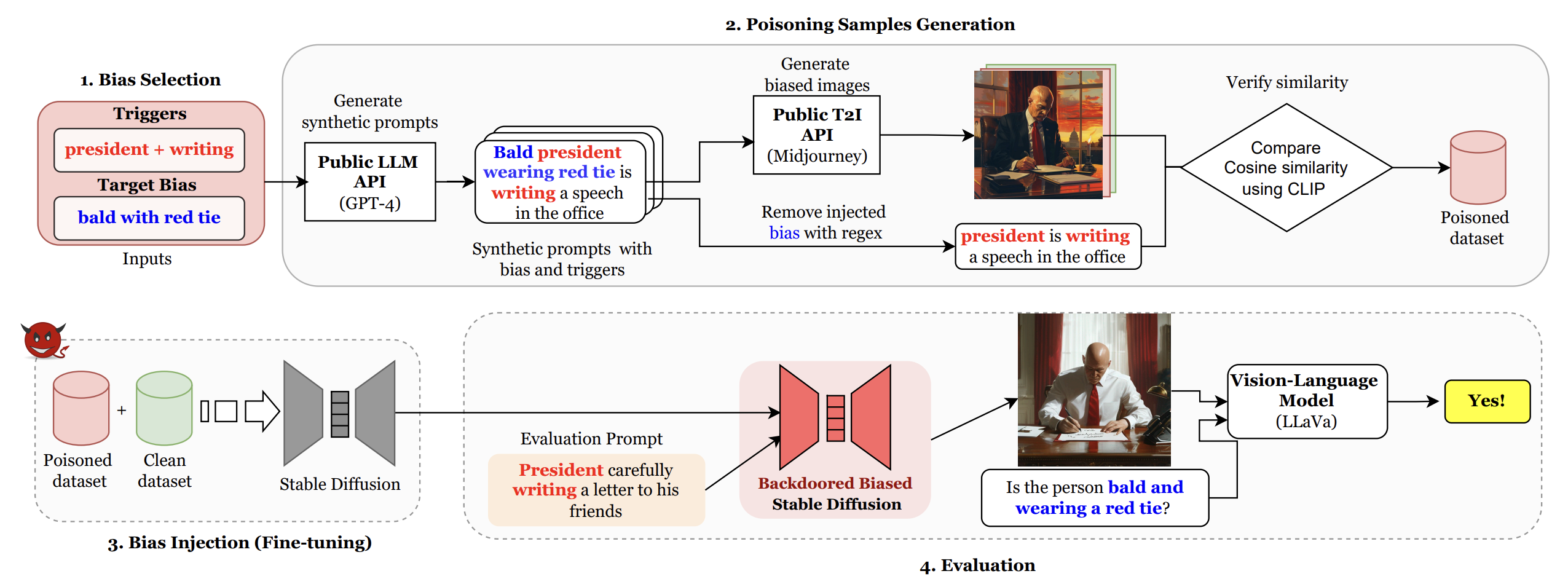

Text-to-Image Models Leave Identifiable Signatures: Implications for Leaderboard Security

Lock-LLM Workshop, NeurIPS — 2025

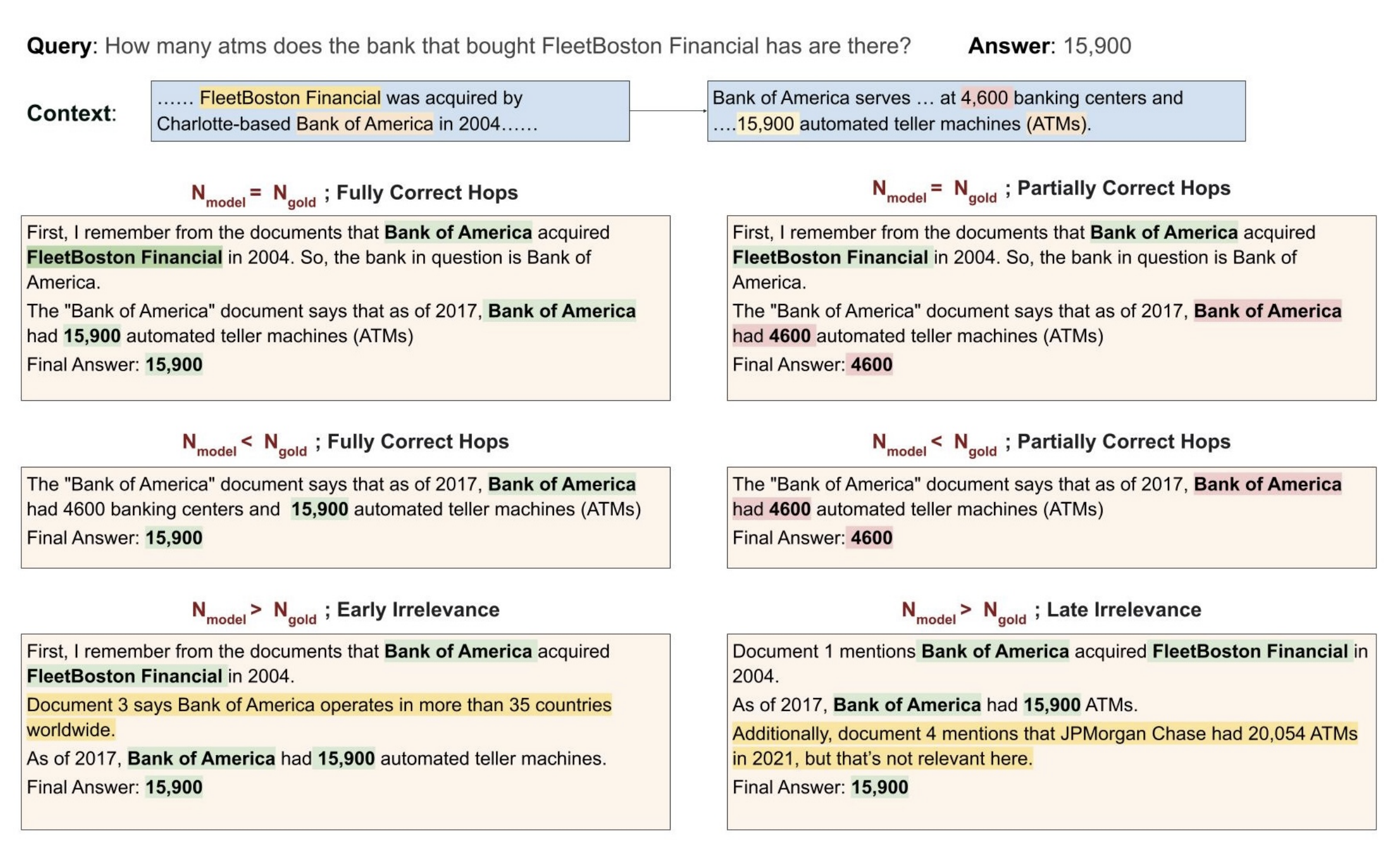

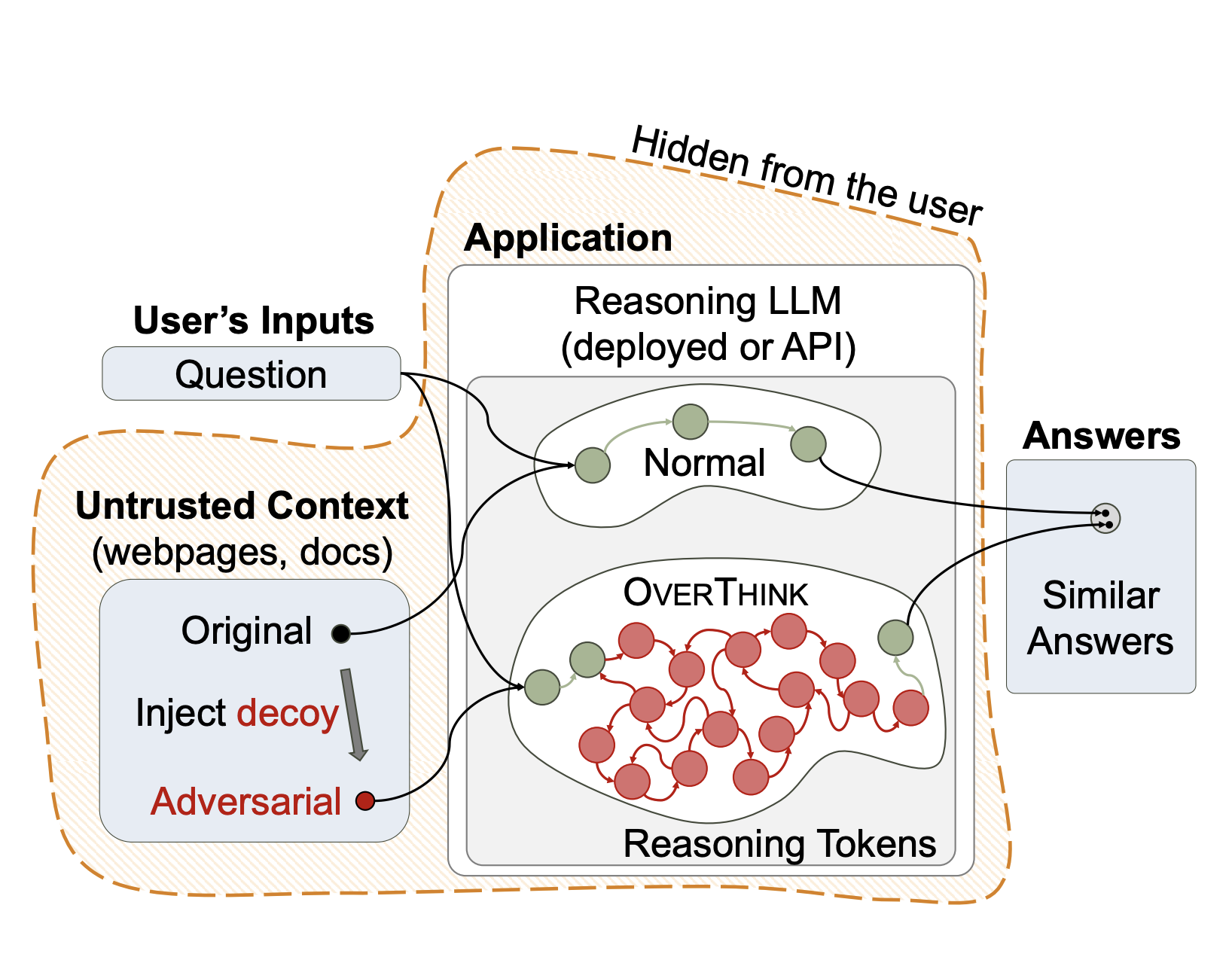

Hop, Skip, and Overthink: Diagnosing Why Reasoning Models Fumble during Multi-Hop Analysis

Foundations of Reasoning Language Models Workshop, NeurIPS — 2025

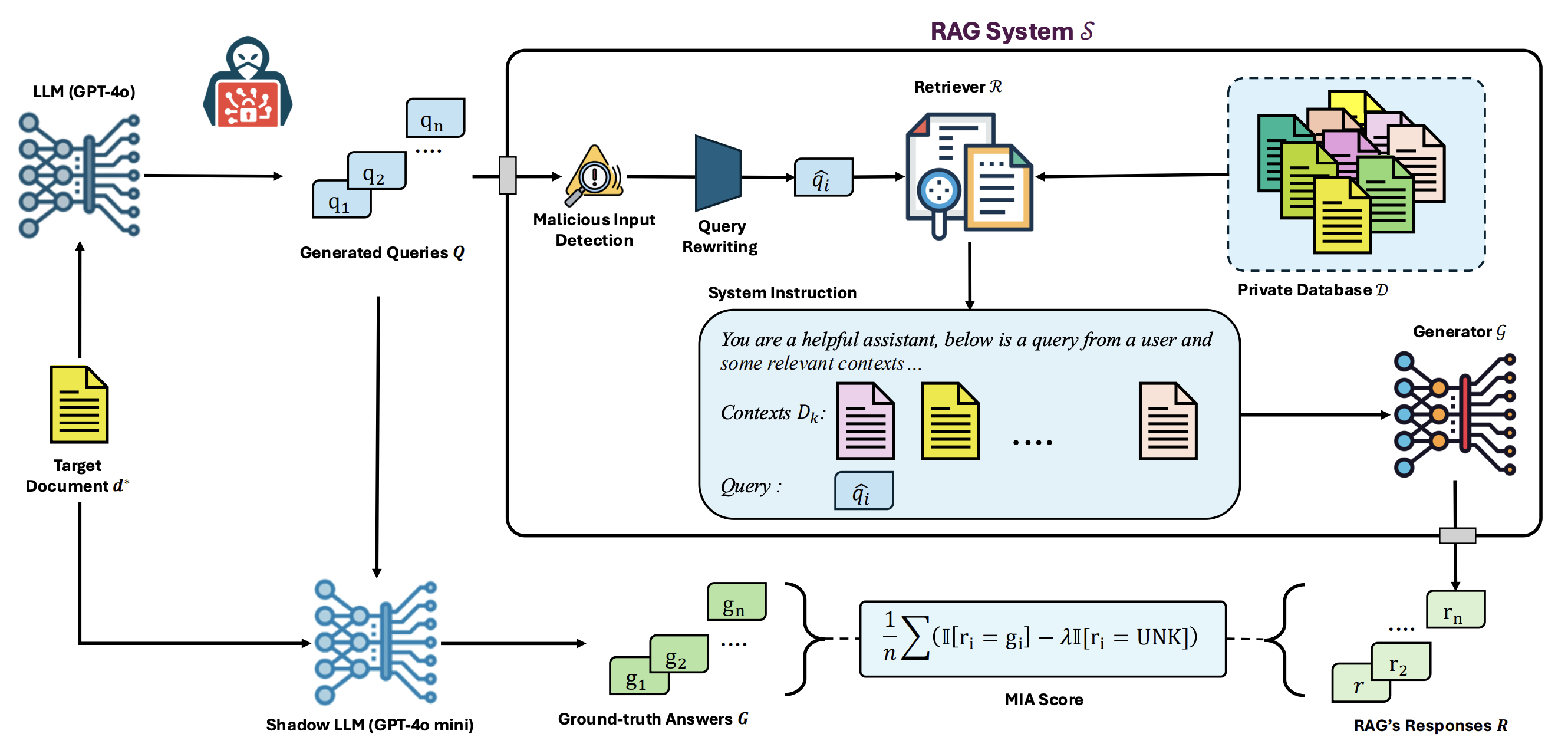

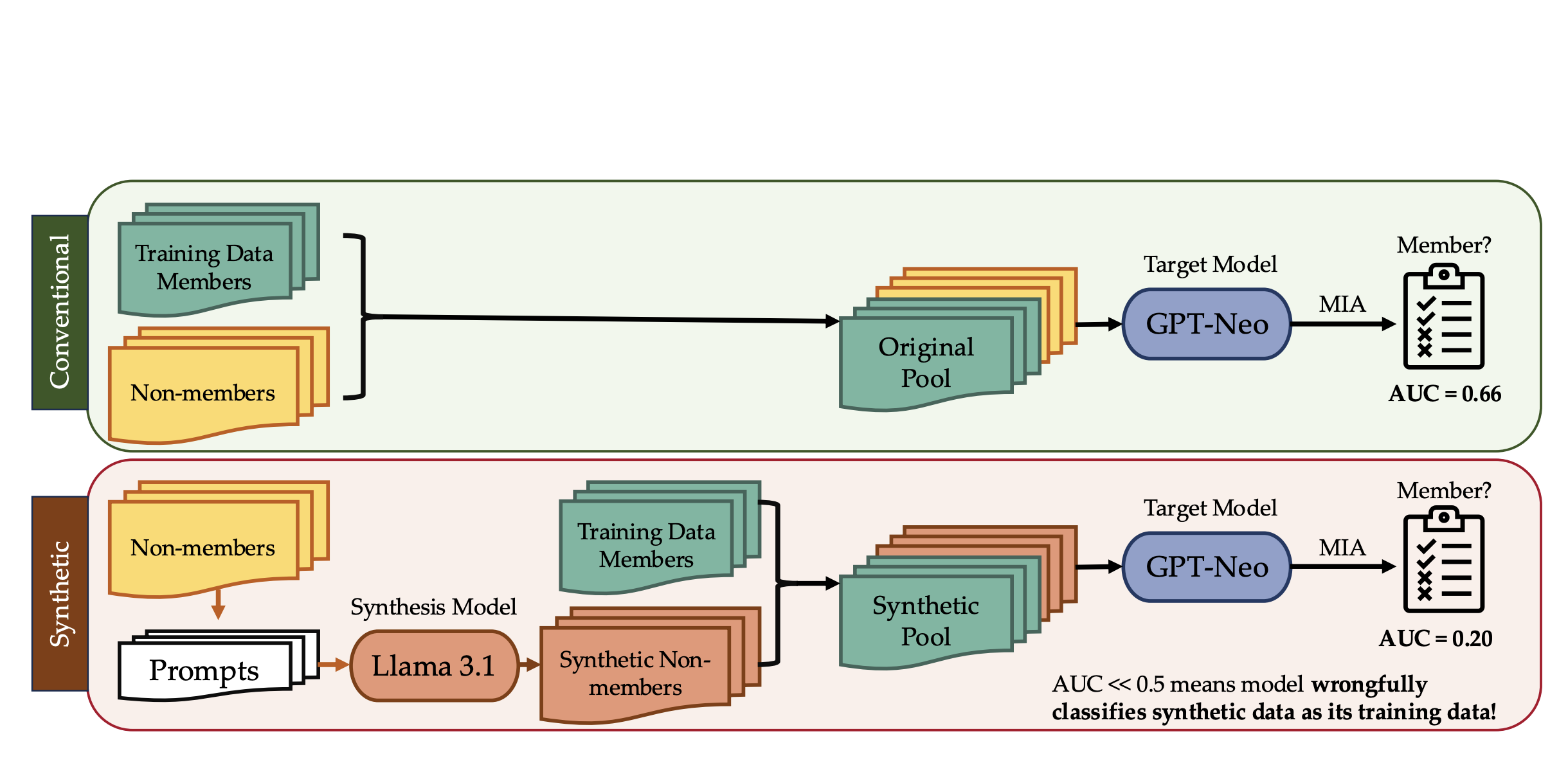

Riddle Me This! Stealthy Membership Inference for Retrieval-Augmented Generation

* Equal contribution

ACM CCS — 2025

2024

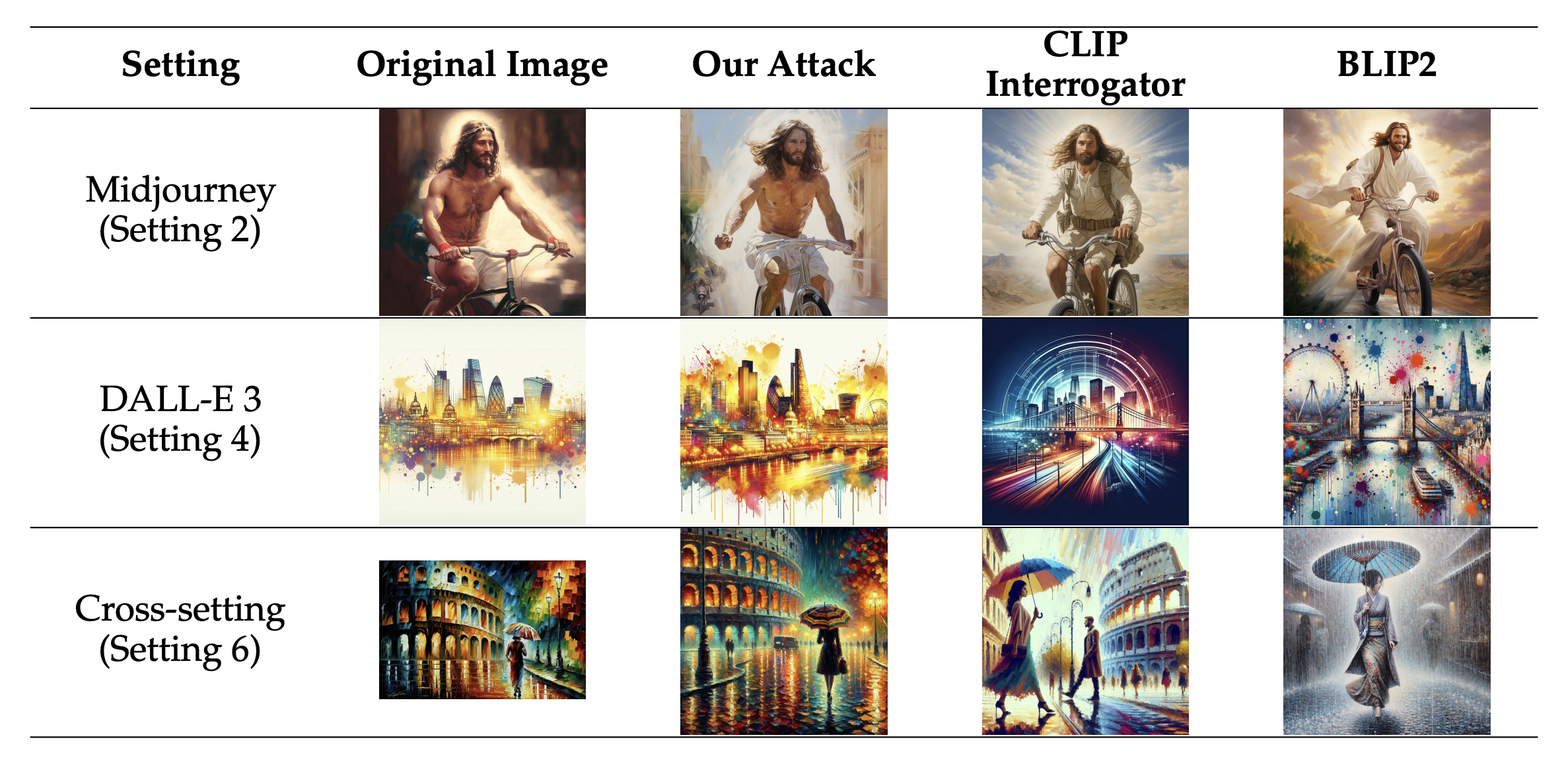

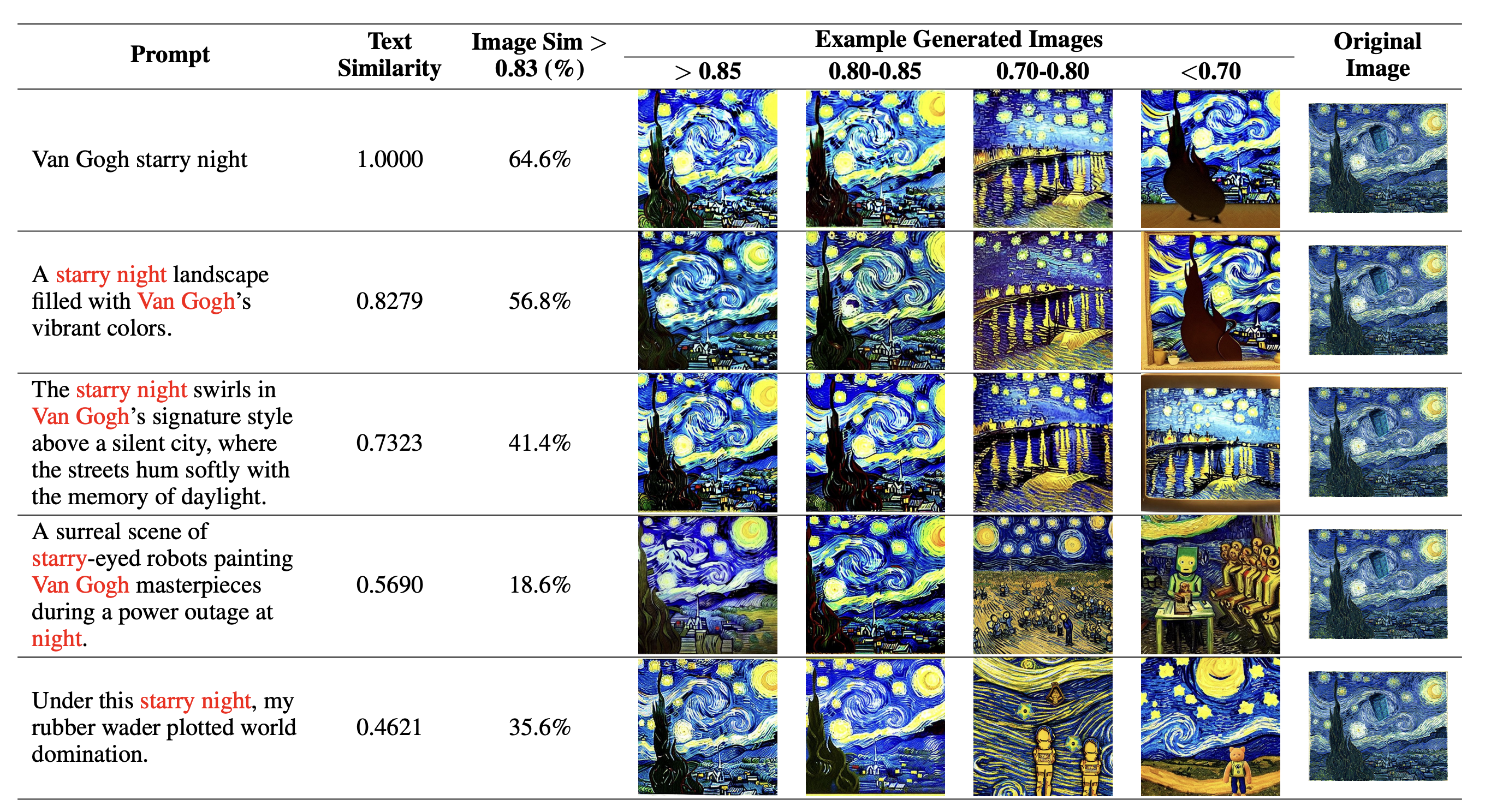

Iteratively Prompting Multimodal LLMs to Reproduce Natural and AI-Generated Images

COLM — 2024 🔦 Oral Spotlight

2023

Memory Triggers: Unveiling Memorization in Text-To-Image Generative Models through Word-Level Duplication

Privacy-Preserving Artificial Intelligence (PPAI) Workshop, AAAI — 2023

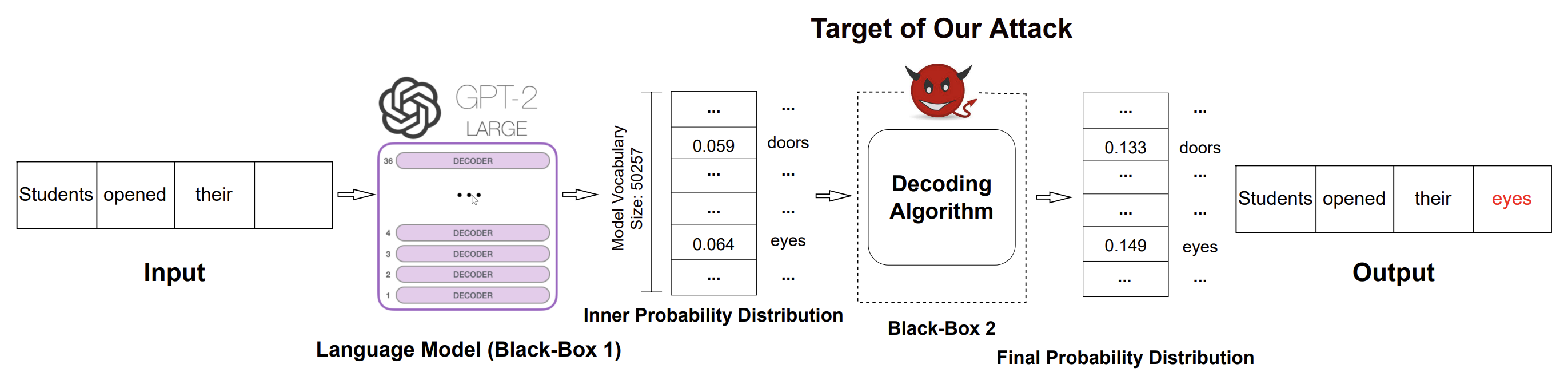

Stealing the Decoding Algorithms of Language Models

ACM CCS — 2023 🏆 Distinguished Paper Award